Accuracy of Monocular Gaze Tracking on 3D Geometry

Abstract

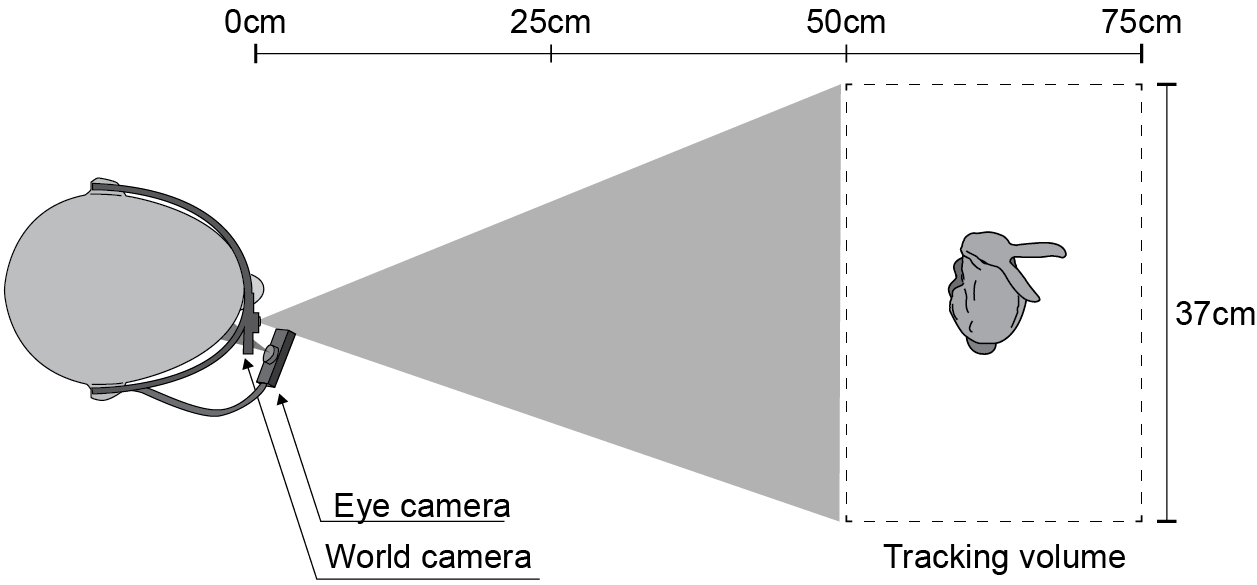

Many applications in visualization benefit from accurate knowledge of where a person is looking. We present a system for accurately tracking gaze positions on a three-dimensional object using a monocular head-mounted eye tracker. We accomplish this by 1) using digital manufacturing to create stimuli with accurately known geometry, 2) embedding fiducial markers directly into the manufactured objects to reliably estimate the rigid transformation of the object, and 3) using a perspective model to relate pupil positions to 3D locations. This combination enables the efficient and accurate computation of gaze position on an object from measured pupil positions. We validate the accuracy of our system experimentally, achieving an angular resolution of 0.8° and a 1.5% depth error using a simple calibration procedure with 11 points.
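
The abstract does not say how the rigid transformation is computed from the markers or how the angular error is measured. As a minimal sketch, assuming the 3D marker positions are known both in the object's model frame and in the tracker frame, a standard least-squares fit (the Kabsch algorithm) could recover the rotation and translation, and the angular error could be taken as the angle between the rays from the eye to the true and the estimated gaze point. The function names `estimate_rigid_transform` and `angular_error_deg` are hypothetical and not taken from the paper.

```python
import numpy as np


def estimate_rigid_transform(model_pts, observed_pts):
    """Least-squares rigid fit (Kabsch): observed ~= R @ model + t.

    Assumption: both inputs are (N, 3) arrays of corresponding
    marker positions, with N >= 3 non-collinear points.
    """
    model_pts = np.asarray(model_pts, dtype=float)
    observed_pts = np.asarray(observed_pts, dtype=float)
    model_centroid = model_pts.mean(axis=0)
    observed_centroid = observed_pts.mean(axis=0)
    # Cross-covariance of the centered point sets.
    H = (model_pts - model_centroid).T @ (observed_pts - observed_centroid)
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection (determinant -1) in the SVD solution.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = observed_centroid - R @ model_centroid
    return R, t


def angular_error_deg(eye, target, estimate):
    """Angle in degrees between the rays eye->target and eye->estimate."""
    eye = np.asarray(eye, dtype=float)
    a = np.asarray(target, dtype=float) - eye
    b = np.asarray(estimate, dtype=float) - eye
    cos_angle = (a @ b) / (np.linalg.norm(a) * np.linalg.norm(b))
    return float(np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0))))
```

With the fitted `R` and `t`, any point on the known geometry can be mapped into the tracker frame as `R @ p + t` before gaze rays are intersected with the object.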

Reference

Wang, Xi; Lindlbauer, David; Lessig, Christian; Alexa, Marc. Accuracy of Monocular Gaze Tracking on 3D Geometry (Incollection). Workshop on Eye Tracking and Visualization (ETVIS) co-located with IEEE VIS, 2015.

Measuring Visual Salience of 3D Printed Objects

Abstract

We investigate human viewing behavior on physical realizations of 3D objects. Using an eye tracker with a scene camera and fiducial markers, we are able to gather fixations on the surface of the presented stimuli. This data is used to validate assumptions regarding visual saliency that have so far been analyzed experimentally only with flat stimuli. We provide a way to compare fixation sequences from different subjects as well as a model for generating test sequences of fixations unrelated to the stimuli. This way we can show that human observers agree in their fixations for the same object under similar viewing conditions, as expected based on similar results for flat stimuli. We also develop a simple procedure to validate computational models for visual saliency of 3D objects and use it to show that popular models of mesh saliency based on center-surround patterns fail to predict fixations.
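
The abstract does not describe the validation procedure in detail. As a rough illustration only, one common way to score a saliency model against fixations is to z-score the predicted saliency and average it at the fixated locations (in the style of the normalized scanpath saliency measure). The sketch below assumes per-vertex saliency values on a mesh and fixations given as vertex indices. The function name is hypothetical, and this is not necessarily the procedure used in the paper.

```python
import numpy as np


def normalized_saliency_at_fixations(saliency, fixated_vertices):
    """Mean z-scored saliency at fixated vertices.

    Assumptions: `saliency` holds one predicted value per mesh vertex,
    and `fixated_vertices` holds the vertex index of each fixation.
    Values near 0 mean the model does no better than chance; clearly
    positive values mean fixations land on high-saliency vertices.
    """
    saliency = np.asarray(saliency, dtype=float)
    std = saliency.std()
    if std == 0.0:
        raise ValueError("saliency map is constant; score is undefined")
    z_scores = (saliency - saliency.mean()) / std
    indices = np.asarray(fixated_vertices, dtype=int)
    return float(z_scores[indices].mean())
```

The same score computed for fixation sequences that are unrelated to the stimulus gives a baseline to compare against.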

Reference

Wang, Xi; Lindlbauer, David; Lessig, Christian; Maertens, Marianne; Alexa, Marc. Measuring Visual Salience of 3D Printed Objects (Journal Article). IEEE Computer Graphics and Applications, Special Issue on Quality Assessment and Perception in Computer Graphics, 2016.

Tracking the Gaze on Objects in 3D: How do People Really Look at the Bunny?

Abstract

We provide the first large dataset of human fixations on physical 3D objects presented in varying viewing conditions and made of different materials. Our experimental setup is carefully designed to allow for accurate calibration and measurement. We estimate a mapping from the pair of pupil positions to 3D coordinates in space and register the presented shape with the eye tracking setup. By modeling the fixated positions on 3D shapes as a probability distribution, we analyze the similarities among different conditions. The resulting data indicates that salient features depend on the viewing direction. Stable features across different viewing directions seem to be connected to semantically meaningful parts. We also show that it is possible to estimate the gaze density maps from view-dependent data. The dataset provides the necessary ground truth data for computational models of human perception in 3D.
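
To make the idea of a fixation probability distribution concrete, here is a minimal sketch. It assumes fixations are 3D points near the surface and spreads each one over the mesh vertices with a Gaussian kernel. Euclidean distance is used as a simplification (a surface-aware distance such as geodesic distance would be a natural alternative), and the Bhattacharyya coefficient stands in as one possible similarity measure. The kernel, the `sigma` value, and both function names are assumptions for illustration, not the paper's method.

```python
import numpy as np


def gaze_density(vertices, fixations, sigma=0.01):
    """Per-vertex gaze density that sums to 1.

    Assumptions: `vertices` is (V, 3), `fixations` is (F, 3), and
    `sigma` is the kernel width in the same units as the coordinates.
    """
    vertices = np.asarray(vertices, dtype=float)
    fixations = np.asarray(fixations, dtype=float)
    # Squared Euclidean distance from every vertex to every fixation.
    diff = vertices[:, None, :] - fixations[None, :, :]
    sq_dist = (diff ** 2).sum(axis=2)
    weights = np.exp(-sq_dist / (2.0 * sigma ** 2)).sum(axis=1)
    total = weights.sum()
    if total == 0.0:
        raise ValueError("no fixation is close enough to any vertex")
    return weights / total


def bhattacharyya_coefficient(p, q):
    """Similarity of two discrete distributions: 1 if identical, 0 if disjoint."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    return float(np.sum(np.sqrt(p * q)))
```

Densities computed for two viewing conditions on the same mesh can then be compared with `bhattacharyya_coefficient(density_a, density_b)`.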

Reference

Xi Wang, Sebastian Koch, Kenneth Holmqvist, and Marc Alexa. 2018. Tracking the Gaze on Objects in 3D: How do People Really Look at the Bunny? ACM Trans. Graph. 37, 6, Article 188 (November 2018), 18 pages. https://doi.org/10.1145/3272127.3275094